I made a chatbot for a bank a decade ago, and one answer was getting terrible ratings from users. I looked up the questions that led to that answer, and they had been routed correctly. I checked the answer in case it was rude, unclear or missing details, and it was none of those.

The 'bad' answer was not wrong; it was just not what people wanted to hear. It told people they did not qualify for an immediate loan and explained how they could try a slower method to get one.

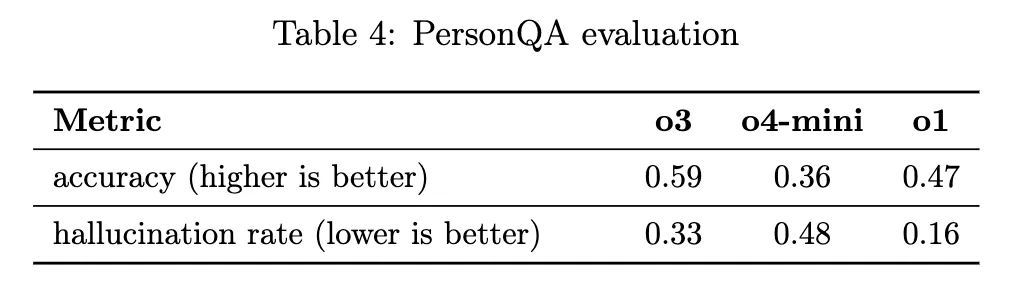

LLMs have gotten so good at telling us what we want to hear that they now make stuff up more than they used to. There is a great article on this increasing misalignment. The growth in hallucination is an indication that the LLM is making stuff up to make us happier.

We should reward correctness (including in the steps taken to reach the answer) rather than whether people like the answer. But the incentives of the testing mechanism, and more seriously of the companies making LLMs, do not do this. If users prefer LLMs that tell them they are great and give plausible-sounding reasons why they should do what they already want to do, those LLMs will be more popular.
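To make the difference between the two incentives concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the `Candidate` fields, the rating numbers and the 50/50 weighting between the final answer and its steps are invented, and this is not how any real LLM is trained or evaluated. It only shows that the same two answers can rank in opposite orders depending on what you reward.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    text: str
    user_rating: float         # hypothetical: 0..1, how much users liked it
    answer_correct: bool       # hypothetical: was the final answer right?
    steps_correct: List[bool]  # hypothetical: was each reasoning step right?


def preference_reward(c: Candidate) -> float:
    """Reward only what users liked."""
    return c.user_rating


def correctness_reward(c: Candidate) -> float:
    """Reward the final answer and the steps equally (an assumed weighting)."""
    if c.steps_correct:
        step_score = sum(c.steps_correct) / len(c.steps_correct)
    else:
        step_score = 0.0
    return 0.5 * float(c.answer_correct) + 0.5 * step_score


# Made-up data modelled on the bank chatbot story.
candidates = [
    Candidate("You qualify for an immediate loan!", 0.9, False, [True, False]),
    Candidate("You do not qualify for an immediate loan; here is a slower route.",
              0.2, True, [True, True]),
]

best_by_preference = max(candidates, key=preference_reward)
best_by_correctness = max(candidates, key=correctness_reward)

print("Users prefer:     ", best_by_preference.text)
print("Correctness picks:", best_by_correctness.text)
```

With these invented numbers the pleasing but wrong answer wins on ratings, and the unwelcome but correct one wins on correctness.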

Dirk Gently's Holistic Detective Agency by Douglas Adams has a program called REASON, and LLMs are turning into it. It would take any conclusion you gave it and construct a plausible series of logical steps to get there.

LLMs will give us a series of plausible-sounding steps that make us happy. It is us who are misaligned, not just the LLMs.