Friday, March 22, 2019

Ireland Population Density Maps

Wednesday, March 20, 2019

Heatmap of the world

The picture as I said in the first comment on the post was inspired by Ed Hawkins spiral animation of world temperatures and was an attempt to show everything in one picture.

A few outlets picked up the picture. The weather channel put it on facebook without crediting me.

Tableau I found out last year use it to advertise themselves but with no credit to me the original design

Ed Hawkins tweeted about the graph

And in June 2016 we discussed further as I switched Time to the Y axis and made other changes. My point is he tweeted about "'heat-map' representation of global temperature changes since 1850" two years before he published his heat map representation (using stripes). My graphs used the Hadcrut-4 and HadCET datasets

In May 2018 he produced a climate graph (below) with only years not months in the heatmap. At no point has he said that my heatmaps (which were inspired by his global temperatures spiral animation) might have inspired him.

I had produced a heatmap graph with lines for years that he saw in Sept 2017

Hawkins graph has deservidely become famous. And heatmaps are one of the most common visualisation types, which is evidence he could have come up with it on his own anyway. I would like if my ones did inspire him that it got mentioned somewhere. But the big deal here is that global warming is being shown in a popular eye catching way.

Monday, February 25, 2019

February is Hot

Central England has weather data going back a long time. 1772 for daily average temperature and 1878 for maximum and minimum temperatures on each day.

2 times February the 25th since 1772 had an average temperature for the day above 10°C in Central England 1790 with 10.7°C, and 1922 with 11°C

The maximum temperatures observed in central England on the 25th of February were 1922 with 14.1,1953 with 13.1 and 1976 with 13.1 For those 140 years the maximum daily temperature averaged 6.6C.

I put the code to work all this out here. Graph of Feb 25th max temperature shows how weird 20°C is

It takes a while for the HADCET data to be updated. There is a nearby weatherstation http://www.weathercast.co.uk/world-weather/weather-stations/obsid/99060.html when I figure out the relationship between the two it will be possible to do a comparison between today and all the previous years.

Tuesday, February 05, 2019

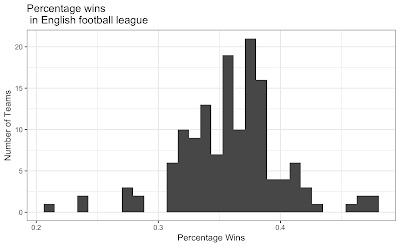

English soccer is not normal

Baseball wins seem not to follow a normal distribution

There is a great R Package dataset of football results by James Curley here. This engsoccerdata has a function to generate soccer league tables of many countries over a long time period.

league<-maketable_all(df=england[,])

team GP W D L gf ga gd Pts Pos

1 Manchester United 962 604 209 149 1856 847 1009 2021 1

and create a new column for the percentage of wins

league<-league %>%

mutate(PercentW = W / GP)

p<-ggplot(data=league, aes(league$PercentW)) + geom_histogram()

#binwidth=20

p<-p + ggtitle("Percentage wins\n in English football league") + xlab("Percentage Wins") + ylab("Number of Teams")

p<-p+theme_update(plot.title = element_text(hjust = 0.5))

p<-p + theme_bw()

library(fitdistrplus)

library(logspline)

x<-league$PercentW

fit.norm <- fitdist(x, "norm")

plot(fit.norm)

shapiro.test(x)

Shapiro-Wilk normality test

data: x W = 0.96276, p-value = 0.0006663 Which means English football wins really do not have a normal distribution.

Goals per game are also not normally distributed. But I dont think anyone expectes them to be

league<-league %>%

mutate(GoalsPgame = gf / GP)

shapiro.test(league$GoalsPgame)

Shapiro-Wilk normality test data: x W = 0.92134, p-value = 4.818e-07

And for France

Shapiro-Wilk normality test

data: leagueF$PercentW

W = 0.98522, p-value = 0.4699 so French football wins do not have might have (thanks for Paulfor the correction in the comments) a normal distribution. I must check the other leagues in the dataset as behaviour this different is odd.

Friday, January 13, 2017

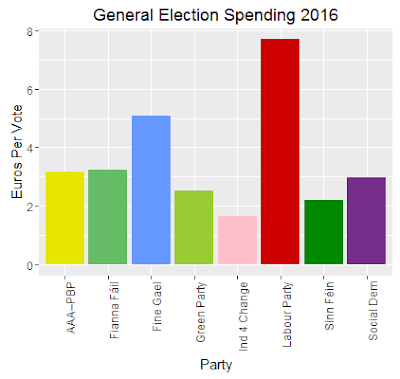

Irish Election Spending 2016

8394832.89 total spending (report here) Electorate: 3305110 so €2.50 was spent on each vote. That is under half what is spend on a US presidential vote.

On a per seat and per vote basis

And on a Per Seat Basis

Party,"Votes,1st pref.",Seats,Spending Fine Gael,544140,50,2768881.50 Fianna Fáil,519356,44,1687916.29 Sinn Féin,295319,23,650190.38 Labour Party,140898,7,1083718.38 AAA–PBP,84168,6,266942.48 Ind 4 Change,31365,4,51669.18 Social Dem,64094,3,190586.93 Green Party,57999,2,146792.27and the r package code is

data <- read.csv("spending.csv", header=TRUE)

datat <- mutate(data, perV = Spending/Votes.1st.pref., perS= Spending/Seats)

q<- ggplot(data=datat, aes(x=Party, y=perV, fill=Party)) + geom_bar(stat="identity") + scale_fill_manual(values=c("#E5E500", "#66BB66", "#6699FF", "#99CC33", "#FFC0CB","#CC0000", "#008800", "#752F8B"))

q <-q + theme(axis.text.x = element_text(angle = 90, hjust = 1))

q <-q + theme(legend.position="none")

q <-q + labs(title = "General Election Spending 2016")

q <-q + labs(y = "Euros Per Vote")

q<- ggplot(data=datat, aes(x=Party, y=perS, fill=Party)) + geom_bar(stat="identity") + scale_fill_manual(values=c("#E5E500", "#66BB66", "#6699FF", "#99CC33", "#FFC0CB","#CC0000", "#008800", "#752F8B"))

q <-q + theme(axis.text.x = element_text(angle = 90, hjust = 1))

q <-q + theme(legend.position="none")

q <-q + labs(title = "General Election Spending 2016")

q <-q + labs(y = "Euros Per Seat")

Thursday, May 19, 2016

Dying at Work in the US

The data contains the date, location and a description for 4000 fatalities over five years. I created columns for state, zipcode, number of people and cause.

The most common interesting words in these descriptions are

- 813 fell

- 708 struck

- 642 truck

- 452 falling

- 382 crushed

- 352 head

- 263 roof

- 261 tree

- 258 electrocuted

- 244 ladder

- 238 vehicle

- 226 trailer

- 197 machine

- 186 collapsed

- 180 forklift

Not common but interesting

- 10 lightning

- 48 shot

- 4 dog

- 2 bees

and here is a map I made of the states where they happen

I have created a repository to try augment the OSHA data and clean it up when errors are found.

The repository is on github here.

If you use it I'll give you edit rights and you can help improve it

Friday, January 22, 2016

England's Temperature in 2015

England has a collected data on daily temperature from 1772 in the Hadley Centre Central England Temperature (HadCET) dataset.

I downloaded this Hadley Centre dataset. And I followed this tutorial. Based on an original graphic by Tufte.

Here the black line is the average temerature for each day last year. The dark line in the middle is the average average temperature (95% confidence). the staw coloured bigger lines represent the highest and lowest average daily temperature ever recorded on that day since 1772. the red dots are the days in 2015 that were hotter than any other day at that time of year since 1772.

Looking at the black line that represents last years temperatures it was the Winter and Autumn that were far above average. Instead of a scorching hot summer most of the record hot days were in November and December. 2014 had the same pattern of a hot Winter. No day in 2015 was the coldest for that date in the recorded time.

Tuesday, November 17, 2015

How Good Will the Upcoming Stephen King Adaptations be?

According to IMDB the Stephen King Novels below are currently being adapted

| Title | good doubled |

|---|---|

| The Talisman | 8.2 |

| Rose Madder | 7.22 |

| Lisey's Story | 7.22 |

| Mr. Mercedes | 7.74 |

| Gerald's Game | 6.86 |

| Cell | 7.22 |

| 11.22.63 | 8.52 |

| The Dark Tower | 8 |

| Title | estimate | lower bound | upper bound |

|---|---|---|---|

| The Talisman | 7.5 | 6.4 | 8.6 |

| Rose Madder | 5.95 | 4.8 | 7.0 |

| Lisey's Story | 5.95 | 4.8 | 7.0 |

| Mr. Mercedes | 6.79 | 5.7 | 8.8 |

| Gerald's Game | 5.37 | 4.2 | 6.4 |

| Cell | 5.95 | 4.8 | 7.0 |

| 11.22.63 | 8.05 | 6.9 | 9.15 |

| The Dark Tower | 7.2 | 6.1 | 8.2 |

There is an interesting 538 podcast here about a company that predicts film earnings. He mentions the correlation between film quality and earnings and film quality. This whole topic is an interesting challenge for prediction.

Stephen King is unique among authors in the number and variety of adaptations his works have gone through. So he is possibly the only author this could be even tried with. I am really looking forward to 11.22.63 and the Talisman now. And if Gerald's Game beats the predicted IMDB score that is a bonus.

Code

mydata = read.csv("King.csv")

library(ggplot2)

attach(mydata) # attach the data frame

king.lm = lm(imdb ~ good.doubled)

Call:

lm(formula = imdb ~ good.doubled)

Coefficients:

(Intercept) good.doubled

-5.821 1.628

upcoming = read.csv("upcoming.csv")

predict(king.lm, upcoming, interval="predict")

> predict(king.lm, upcoming, interval="predict")

Title,Publication date,Pages,imdb,goodreads,good doubled,clr

Carrie,05/04/1974,199,7.4,3.89,7.78,1

Salem's Lot,17/10/1975,439,6.8,3.97,7.94,1

The Shining,28/01/1977,447,8.4,4.12,8.24,1

The Stand,Sep-78,823,7.3,4.32,8.64,1

The Dead Zone,Aug-79,428,7.3,3.88,7.76,1

Firestarter,29/09/1980,426,6,3.8,7.6,1

Cujo,08/09/1981,319,6,3.61,7.22,1

The Running Man,May-82,219,6.6,3.74,7.48,1

Christine,29/04/1983,526,6.6,3.69,7.38,1

Pet Sematary,14/11/1983,374,6.6,3.86,7.72,1

Thinner,19/11/1984,309,5.7,3.6,7.2,1

It,15/09/1986,1138,6.9,4.12,8.24,1

Misery,08/06/1987,310,7.8,4.06,8.12,1

The Tommyknockers,10/11/1987,558,5.4,3.42,6.84,1

The Dark Half,20/10/1989,431,5.9,3.71,7.42,1

Needful Things,Oct-91,690,6.2,3.84,7.68,1

Dolores Claiborne,Nov-92,305,7.4,3.76,7.52,1

The Green Mile,March–August 1996,400,8.5,4.39,8.78,1

Bag of Bones,22/09/1998,529,5.8,3.84,7.68,1

Dreamcatcher,20/03/2001,620,5.5,3.53,7.06,1

Under the Dome,10/11/2009,1074,6.8,3.89,7.78,1

Shawshank Redemption,10/11/2009,181,9.3,4.51,9.02,1

Stand by me,10/11/2009,80,8.1,4.25,8.5,1

The Mist,10/11/2009,230,7.2,3.88,7.76,1

The Langoliers,10/11/2009,230,6.1,3.71,7.42,1

Apt Pupil,1983,179,6.7,3.8,7.7,1

Hearts in Atlantis,2000,640,6.9,3.77,7.54,1

The Talisman,na,na,7.5,4.1, 8.2,2

Rose Madder,na,na,5.95,3.61, 7.22,2

Lisey's Story,na,na,5.95,3.61, 7.22,2

Mr. Mercedes,na,na,6.79,3.87, 7.74,2

Gerald's Game,na,na,5.37,3.43, 6.86,2

Cell,na,na,5.95,3.61, 7.22,2

11.22.63,na,na,8.05,4.26, 8.52,2

The Dark Tower,na,na,7.2,4,8,2

mydata = read.csv("King.csv")

attach(mydata)

p1 <- ggplot(mydata, aes(x=good.doubled, y=imdb)) +

geom_point(colour = factor(clr),shape=1,size=2) + # Use hollow circles

geom_smooth(method=lm,se=FALSE)

p1 <- p1 + ylab("IMDB Ratings")

p1 <- p1 + xlab("GoodReads Ratings")

p1 <- p1 + ggtitle("Upcoming Stephen King Adaptations")

p1 <- p1 + annotate("text", x = 6.97, y = 5.26, label = "The Tommyknockers", size=3, colour="blue3")

p1 <- p1 + annotate("text", x = 7.85, y = 7.42, label = "Carrie", size=3, colour="blue3")

#Salem's Lot,17/10/1975,439,6.8,3.97,7.94,1

p1 <- p1 + annotate("text", x =8.0 , y =6.9, label = "Salem's Lot", size=3, colour="blue3")

p1 <- p1 + annotate("text", x = 7.8, y = 6.5, label = "Pet Sematary", size=3, colour="blue3")

p1 <- p1 + annotate("text", x = 8.34, y = 8.51, label = "The Shining", size=3, colour="blue3")

p1 <- p1 + annotate("text", x = 8.62, y = 8.2, label = "Stand By Me", size=3, colour="blue")

p1 <- p1 + annotate("text", x = 8.9, y = 8.4, label = "The Green Mile", size=3, colour="blue3")

p1 <- p1 + annotate("text", x = 9.0, y = 9.09, label = "Shawshank\nRedemption" , size=3, colour="blue3")

p1 <- p1 + annotate("text", x = 8.75, y = 7.3, label = "The Stand", size=3, colour="blue3")

p1 <- p1 + annotate("text", x = 8.27, y = 6.85 , label = "It", size=3, colour="blue3")

p1 <- p1 + annotate("text", x = 8.19, y = 7.74, label = "Misery", size=3, colour="blue3")

p1 <- p1 + annotate("text", x = 8.05, y = 6.7, label = "Under the Dome", size=3, colour="blue3")

#Under the Dome,10/11/2009,1074,6.8,3.89,7.78,1

p1 <- p1 + annotate("segment", x = 7.9, xend = 7.79, y = 6.7, yend = 6.8, colour = "blue3")

#Dolores Claiborne,Nov-92,305,7.4,3.76,7.52,1

p1 <- p1 + annotate("text", x = 7.5, y = 7.3, label = "Dolores Claiborne", size=3, colour="blue3")

#The Dark Half,20/10/1989,431,5.9,3.71,7.42,1

p1 <- p1 + annotate("text", x = 7.5, y = 5.81, label = "The Dark Half", size=3, colour="blue3")

#Bag of Bones,22/09/1998,529,5.8,3.84,7.68,1

p1 <- p1 + annotate("text", x = 7.78, y = 5.7, label = "Bag of Bones", size=3, colour="blue3")

#The Dead Zone,Aug-79,428,7.3,3.88,7.76,1

p1 <- p1 + annotate("text", x = 7.5, y = 7.72, label = "The Dead Zone", size=3, colour="blue3")

p1 <- p1 + annotate("segment", x = 7.76, xend = 7.5, y = 7.33, yend = 7.66, colour = "blue3")

#The Mist,10/11/2009,230,7.2,3.88,7.76,1

p1 <- p1 + annotate("text", x = 7.76, y = 7.1, label = "The Mist", size=3, colour="blue3")

#Firestarter,29/09/1980,426,6,3.8,7.6,1

p1 <- p1 + annotate("text", x = 7.71, y = 6, label = "Firestarter", size=3, colour="blue3")

#The Langoliers,10/11/2009,230,6.1,3.71,7.42,1

p1 <- p1 + annotate("text", x = 7.56, y = 6.11, label = "The Langoliers", size=3, colour="blue3")

#Cujo,08/09/1981,319,6,3.61,7.22,1

p1 <- p1 + annotate("text", x = 7.23, y = 6.1, label = "Cujo", size=3, colour="blue3")

#The Running Man,May-82,219,6.6,3.74,7.48,1

p1 <- p1 + annotate("text", x = 7.48, y = 6.7, label = "The Running Man", size=3, colour="blue3")

#Christine,29/04/1983,526,6.6,3.69,7.38,1

p1 <- p1 + annotate("text", x = 7.38, y = 6.5, label = "Christine", size=3, colour="blue3")

#Thinner,19/11/1984,309,5.7,3.6,7.2,1

p1 <- p1 + annotate("text", x = 7.25, y = 5.6, label = "Thinner", size=3, colour="blue3")

#Needful Things,Oct-91,690,6.2,3.84,7.68,1

p1 <- p1 + annotate("text", x = 7.83, y = 6.2, label = "Needful Things", size=3, colour="blue3")

#Dreamcatcher,20/03/2001,620,5.5,3.53,7.06,1

p1 <- p1 + annotate("text", x = 7.2, y = 5.5, label = "Dreamcatcher", size=3, colour="blue3")

#The Talisman,na,na,7.52,4.1, 8.2,2

p1 <- p1 + annotate("text", x = 8.36, y = 7.52, label = "The Talisman", size=3, colour="red3")

#Rose Madder,na,na,5.93,3.61, 7.22,2

p1 <- p1 + annotate("text", x = 7.07, y = 5.93, label = "Rose Madder", size=3, colour="red3")

#Lisey's Story,na,na,5.93,3.61, 7.22,2

p1 <- p1 + annotate("text", x = 7.07, y = 6.05, label = "Lisey's Story", size=3, colour="red3")

#Mr. Mercedes,na,na,6.78,3.87, 7.74,2

p1 <- p1 + annotate("text", x = 7.60, y = 6.81, label = "Mr. Mercedes", size=3, colour="red3")

#Gerald's Game,na,na,5.34,3.43, 6.86,2

p1 <- p1 + annotate("text", x = 7.03, y = 5.36, label = "Gerald's Game", size=3, colour="red3")

#Cell,na,na,5.93,3.61, 7.22,2

p1 <- p1 + annotate("text", x = 7.28, y = 5.92, label = "Cell", size=3, colour="red3")

#11.22.63,na,na,8.05,4.26, 8.52,2

p1 <- p1 + annotate("text", x = 8.63, y = 8.05, label = "11.22.63", size=3, colour="red3")

#The Dark Tower,na,na,7.2,4,8,2

p1 <- p1 + annotate("text", x = 8.16, y = 7.2, label = "The Dark Tower", size=3, colour="red3")

p1 <- p1 + ylab("IMDB Ratings")

p1 <- p1 + xlab("GoodReads Ratings")

p1

ggsave("plot.png", width=10, height=10, dpi=100)

detach(mydata)

Friday, November 13, 2015

Do Stephen King's Better Books Make Better Films?

Are Stephen King films better than the books? – https://t.co/HEWQJyPGcc

(definitely a positive correlation) pic.twitter.com/GbS0Xq9s4w

— Max Roser (@MaxCRoser) November 11, 2015

I think Max is right on this. So I made a graph showing how movie and book ratings are correlated

Now the actual correlation figure is

cor(good.doubled, imdb, use="complete")

[1] [1] 0.8766 so it looks like highly rated King books make highly rated films

It could be that a good film makes people read and rate highly a book. But my basic conclusion is Stephen King's highly rated books make higher rated films

Appendix: Code for the Graph

mydata = read.csv("King.csv")

attach(mydata)

cor(good.doubled, imdb, use="complete")

p1 <- ggplot(mydata, aes(x=good.doubled, y=imdb)) +

geom_point(shape=1) + # Use hollow circles

geom_smooth(method=lm, # Add linear regression line

se=FALSE) # Don't add shaded confidence region

p1 <- p1 + ylab("IMDB Ratings")

p1 <- p1 + xlab("GoodReads Ratings *2")

p1 <- p1 + ggtitle("Stephen King: Books vs. Movies Correlation")

p1 <- p1 + geom_text(aes(label=ifelse(good.doubled>0,as.character(Title),'')),hjust=0,just=0,size=2, position = "jitter")

p1

ggsave("correlate.png")

Other Correlations

I changed the publication data column into a column of Years since 1974.

cor(good.doubled, Years, use="complete")

[1] -0.1879052 Not a strong correlation about Kings adapted books get better or worse since he started

> cor(good.doubled, Pages, use="complete")

[1] -0.02715659 no relationship between the length of a book and it being rated highly or lowly.

> cor(Years, Pages, use="complete")

[1] 0.482048 King's adapted books may be getting a bit longer over time

Wednesday, November 11, 2015

Are Stephen King films better than the books?

Rejoice, Snobs: The Book IS Better Than The Movie

The literary originals have higher ratings than the film adaptations 74 percent of the time. This recent piece claims books are usually better than their films versions.

This article reminded me of a claim that Stephen King films are better than his books. So I extracted the ratings from IMDB and Goodreads. I doubled the Goodreads ratings to make them out of 10.

I included miniseries. Carrie was made twice but I only included the original. The Langoliers, Maximum Overdrive, Lawnmower man and Secret garden seem not to have independent English language printed book versions.

The Shining is one of two films with a higher rating than the book. King famously hates the film

"Are you mystified by the cult that's grown around Kubrick's Shining?

I don't get it. But there are a lot of things that I don't get. But obviously people absolutely love it, and they don't understand why I don't. The book is hot, and the movie is cold; the book ends in fire, and the movie in ice."

To make this a fair test films and book would have to be graded on a curve. By what the average rating of each is. There is a good discussion on how to normalise the original comparison here. But I think this graph is enough to show Stephen King books are better than his films.

Less than 8% of King adaptations are rated higher compared to 26% usually for book adaptations. To get King to have the average quality of adaptations, sixish Stephen King Films being rated better than is books. This would require Dolores Claiborne, The Green Mile, Stand By Me, Misery and maybe Carrie to be much worse books or these already highly rated films to be much better. I am going to call this Myth Busted*, Stephen King adaptations are less successful than the average adaptation.

| Title | Publication date | Pages | imdb | goodreads | good doubled |

|---|---|---|---|---|---|

| Carrie | April 5, 1974 | 199 | 7.4 | 3.89 | 7.78 |

| 'Salem's Lot | October 17, 1975 | 439 | 6.8 | 3.97 | 7.94 |

| The Shining | January 28, 1977 | 447 | 8.4 | 4.12 | 8.24 |

| The Stand | Sep-78 | 823 | 7.3 | 4.32 | 8.64 |

| The Dead Zone | Aug-79 | 428 | 7.3 | 3.88 | 7.76 |

| Firestarter | September 29, 1980 | 426 | 6 | 3.8 | 7.6 |

| Cujo | September 8, 1981 | 319 | 6 | 3.61 | 7.22 |

| The Running Man | May-82 | 219 | 6.6 | 3.74 | 7.48 |

| Christine | April 29, 1983 | 526 | 6.6 | 3.69 | 7.38 |

| Pet Sematary | November 14, 1983 | 374 | 6.6 | 3.86 | 7.72 |

| Thinner | November 19, 1984 | 309 | 5.7 | 3.6 | 7.2 |

| It | September 15, 1986 | 1138 | 6.9 | 4.12 | 8.24 |

| Misery | June 8, 1987 | 310 | 7.8 | 4.06 | 8.12 |

| The Tommyknockers | November 10, 1987 | 558 | 5.4 | 3.42 | 6.84 |

| The Dark Half | October 20, 1989 | 431 | 5.9 | 3.71 | 7.42 |

| Needful Things | Oct-91 | 690 | 6.2 | 3.84 | 7.68 |

| Dolores Claiborne | Nov-92 | 305 | 7.4 | 3.76 | 7.52 |

| The Green Mile | March–August 1996 | 400 | 8.5 | 4.39 | 8.78 |

| Bag of Bones | September 22, 1998 | 529 | 5.8 | 3.84 | 7.68 |

| Dreamcatcher | March 20, 2001 | 620 | 5.5 | 3.53 | 7.06 |

| Under the Dome | November 10, 2009 | 1074 | 6.8 | 3.89 | 7.78 |

| Shawshank Redemption | 1982 | 181 | 9.3 | 4.51 | 9.02 |

| Stand by me | 1982 | 80 | 8.1 | 4.25 | 8.5 |

| The Mist | 1983 | 230 | 7.2 | 3.88 | 7.76 |

| Apt Pupil | 1983 | 179 | 6.7 | 3.8 | 7.7 |

| Hearts in Atlantis | 2000 | 640 | 6.9 | 3.77 | 7.54 |

mydata = read.csv("King.csv")

library(ggplot2)

p1 <- ggplot(mydata, aes(x = good.doubled, y = imdb))

p1 <- p1 + geom_abline(intercept=0, slope=1)

p1 <- p1 + geom_point(shape=1)

p1 <- p1 + ylim(5, 10)

p1 <- p1 + xlim(5, 10)

p1 <- p1 + ylab("IMDB Ratings")

p1 <- p1 + xlab("GoodReads Ratings *2")

p1 <- p1 + ggtitle("Stephen King: Books vs. Movies")

p1 <- p1 + annotate("text", x = 7.3, y = 5.3, label = "The Tommyknockers", size=3, colour="blue3")

p1 <- p1 + annotate("text", x = 7.95, y = 7.37, label = "Carrie", size=3, colour="blue3")

p1 <- p1 + annotate("text", x = 8, y = 6.5, label = "Pet Sematary", size=3, colour="blue3")

p1 <- p1 + annotate("text", x = 7.9, y = 8.54, label = "The Shining", size=3, colour="red3")

p1 <- p1 + annotate("text", x = 8.75, y = 9.4, label = "Shawshank Redemption", size=3, colour="red3")

p1 <- p1 + annotate("text", x = 9.18, y = 8.4, label = "The Green Mile", size=3, colour="blue3")

p1 <- p1 + annotate("text", x = 8.72, y = 8, label = "Stand By Me", size=3, colour="blue3")

p1 <- p1 + annotate("text", x = 8.92, y = 7.3, label = "The Stand", size=3, colour="blue3")

p1 <- p1 + annotate("text", x = 8.3, y = 6.85 , label = "It", size=3, colour="blue3")

p1 <- p1 + annotate("text", x = 8.32, y = 7.7, label = "Misery", size=3, colour="blue3")

p1 <- p1 + annotate("text", x = 8.18, y = 6.7, label = "Under the Dome", size=3, colour="blue3")

p1 <- p1 + annotate("text", x = 9.28, y = 5.0, label = "Books Higher Rated", size=5, colour="blue3")

p1 <- p1 + annotate("text", x = 5.7, y = 9.90, label = "Films Higher Rated", size=5, colour="red3")

p1 <- p1 + annotate("text", x = 6.9, y = 6, label = "Cujo", size=3, colour="blue3")

p1 <- p1 + annotate("segment", x = 7.05, xend = 7.2, y = 6.0, yend = 6.0, colour = "blue3")

p1 <- p1 + annotate("text", x = 6.45, y = 5.52, label = "Dreamcatcher", size=3, colour="blue3")

p1 <- p1 + annotate("segment", x = 6.8, xend = 7.05, y = 5.5, yend = 5.5, colour = "blue3")

p1 <- p1 + annotate("text", x = 6.80, y = 7.4, label = "Dolores Claiborne", size=3, colour="blue3")

p1 <- p1 + annotate("segment", x = 7.28, xend = 7.5, y = 7.4, yend = 7.4, colour = "blue3")

p1 <- p1 + annotate("text", x = 7.92, y = 5.72, label = "The Dark Half", size=3, colour="blue3")

p1

ggsave("plot.png")

Sunday, April 19, 2015

England's Temperature in 2014

According to wikipedia, last year was the hottest year in this dataset with an average temperature of 10.94 °C.

I downloaded this Hadley Centre dataset. And I followed this tutorial. Based on an original graphic by Tufte. The picture is similar to the one from my post on Dublin's 2014 Weather.

Looking at the black line that represents last years temperatures it was the winter and autumn that were far above average. Instead of a scorching hot summer the three record hot days were in October and December. No day in 2014 was the coldest for that date in the recorded time.

The wikipedia page on this dataset shows the average temperature of this dataset rising over time

Saturday, February 28, 2015

What Colour are Books?

Colours Used

I counted up the occurrence of the

colours = ["red","orange","yellow","green","blue","purple","pink","brown","black","gray","white", "grey"]

in Ulysses by James Joyce. I'll post the word count code soon

red 113, orange 12, yellow 50, green 98, blue 82, purple 17, pink 21, brown 59, black 146, gray 2, white 163, grey 68

Turned this count into a barchart with r package ggplot2 graphing package

library(ggplot2)

df <- data.frame(colours = factor(c("pink","red","orange","yellow","green","blue","purple", "brown", "black", "white", "grey"), levels=c("pink","red","orange","yellow","green","blue","purple","brown", "black", "white", "grey")),

total_counts = c(21.0, 113.0,12.0, 50.0, 98.0, 82.0, 17.0, 59.0, 146.0,163.0,70.0))

colrs = factor(c("pink","red","orange","yellow","green","blue","purple", "brown", "black", "white", "grey"))

bp <- ggplot(data=df, aes(x=colours, y=total_counts)) + geom_bar(stat="identity",fill=colrs)+guides(fill=FALSE)

bp + theme(axis.title.x = element_blank(), axis.title.y = element_blank())+ ggtitle("Ulysses Color Counts")

bp

There is a huge element of unweaving the rainbow in just counting the times a colour is mentioned in a book. The program distills “The sea, the snotgreen sea, the scrotumtightening sea.” into a single number. Still I think the ability to quickly look at the colour palette of a book is interesting.

The same picture made from the colours in Anna Karenina by Leo Tolstoy, Translated by Constance Garnett

Translations

Translations produce really funny graphs with this method. According to Jenks@GreekMythComix the ancient Greeks did not really use colours in the same abstract way we did. Things were not 'orange' so much as 'the colour of an orange'. The counts in the Alexander Pope translation of the Iliad are

red 36, yellow 11, green 16, blue 9, purple 43, brown 4, black 69, gray 1, white 25, grey 6

Because colours are not really mentioned in the original Iliad these sorts of graphs could be a quick way to compare translations. Google book trends does not seem to show increased use of these colours overtime.

Sunday, February 22, 2015

2014 Weather Visualizations

Met Eireann have datasets going back to 1985 on their website here. Some basic data munging on the Met Eireann set for Dublin Airport and I followed the rstats code from the tutorial above to build the graphs below. Wexford would be more interesting for Sun and Kerry for Rain and Wind but those datasets would not download for me.

The first is a comparison of the temperature in 2014 compared to the same date in other years.

Next I looked at average wind speed

And finally the number of hours of sun

These visualizations doesn't look like 2014 was a particularly unusual year for Irish weather. With 30 years of past data if weather was random (which it isn't) at random around 12 days would break the high and low mark for most of these measures. Only the number of sunny days beat this metric. The data met.ie gives contains every day since 1985

maxtp: - Maximum Air Temperature (C)

mintp: - Minimum Air Temperature (C)

rain: - Precipitation Amount (mm)

wdsp: - Mean Wind Speed (knot)

hm: - Highest ten minute mean wind speed (knot)

ddhm: - Mean Wind Direction over 10 minutes at time of highest 10 minute mean (degree)

hg: - Highest Gust (knot)

sun: - Sunshine duration (hours)

dos: - Dept of Snow (cm)

g_rad - Global Radiation (j/cm sq.)

i: - Indicator

Gust might be an interesting one given the storms we had winter 2014. I put big versions of these pictures here, here and here.

Wednesday, February 04, 2015

Irish Alcohol Consumption in 2020

What would Irish alcohol consumption be if current trends continue? Knowing this the effectiveness of new measures can be estimated.

The OECD figures are here. I put them in a .csv here.The WHO figures for alcohol consumption are here I loaded the data in R Package

datavar <- read.csv("OECDAlco.csv")

attach(datavar)

plot(Date,Value,

main="Ireland Alcohol Consumption")

Which looks like this

Looking at that graph alcohol consumption rose from the first year we have data for 1960 until about 2000 and then started dropping. So if the trend since 2000 continued what would alcohol consumption be in 2020?

'Irish people still drink an annual 11.6 litres' I would like to see the source for this figure. We drank 11.6 litres in 2012 according to the OECD. I cannot find OECD figures for 2014. In 2004 we drank 13.6L the claimed 20pc reduction of this is 10.9L, not 11.6L. Whereas the 14.3L we drank in 2002 with a 20pc reduction would now be 11.4. This means it really looks to me like the Independent were measuring alcohol usage up to 2012.

Taking the data since 2000 until 2012.

newdata <- datavar[ which(datavar$Date > 1999), ]

detach(datavar)

attach(newdata)

plot(Date,Value,

main="Ireland Alcohol Consumption")

cor(Date,Value)

The correlation between year and alcohol consumption since 2000 is [1] -0.9274126. It look like there is a close relationship between the year and the amount of alcohol consumed in that time. Picking 2000, near the peak of alcohol consumption, as the starting date for analysis is arguable. But 2002 was the start of this visible trend in reduced alcohol consumption.

Now I ran a linear regression to predict based on this data alcohol consumption in 2015 and 2020.

> linearModelVar <- lm(Value ~ Date, newdata) > linearModelVar$coefficients[[2]]*2015+linearModelVar$coefficients[[1]] [1] 10.42143 > linearModelVar$coefficients[[2]]*2020+linearModelVar$coefficients[[1]] [1] 9.023077 >This means based on data from 2000-2012 we would expect people to drink 10.4 litres this year. Reducing to drinking 9 litres in 2020. So with current trends Irish alcohol consumption will be lower than 'the aim is to bring down Ireland's consumption of alcohol to the OECD average of 9.1 litres in five years'.

There could be something else that is going to alter the trend. One obvious one would be a glut of young adults. People in their 20 drink more than older people. If there are a higher proportion of youths about then the alcohol consumption will rise all else being equal. So will there be a higher proportion of people in their 20s in 5 years time?

The population pyramids projections for Ireland are here. Looking at these there seems to have been a higher proportion of young adults in 2010 than there will be in 2020 which would imply lower alcohol consumption

it would be interesting to see the data and the model that the prediction of Irish alcohol consumption are based on. And to see how minimum alcohol pricing changes the results of these models. But without seeing those models it looks like the Government strategy is promising current trends to continue in response to a new law.

Sunday, December 30, 2012

World Cup 2010 Heatmap

library(RColorBrewer)

#save the guardian data to world.csv and load it

players2<-read.csv('World.csv', sep=',', header=TRUE)

players2[1:3,]

#players with the same name (like Torres) meant I had to merge surnames and countries

players2$Name <-paste(players2[,1], players2[,2])

rownames(players2) <- players2$Name

###I removed one player by hand

###I now do not need these columns

players2$Position <- NULL

players2$Player.Surname <- NULL

players2$Team <- NULL

players3 <-players2[order(players2$Total.Passes, decreasing=TRUE),]

### or to order by time played

###players3 <-players2[order(players2$Time.Played, decreasing=TRUE),]

players3 <- players3[,1:5]

players4<-players3[1:50,]

players_matrix <-data.matrix(players4)

###change names of columns to make graph readable

colnames(players_matrix )[1] <- "played"

colnames(players_matrix )[2] <- "shots"

colnames(players_matrix )[3] <- "passes"

colnames(players_matrix )[4] <- "tackles"

colnames(players_matrix )[5] <- "saves"

players_heatmap <- heatmap(players_matrix, Rowv=NA, Colv=NA, col = brewer.pal(9, 'Blues'), scale='column', margins=c(5,10), main="World Cup 2010")

dev.print(file="SoccerPassed.jpeg", device=jpeg, width=600)

#players_heatmap <- heatmap(players_matrix, Rowv=NA, Colv=NA, col = brewer.pal(9, 'Greens'), scale='column', margins=c(5,10), main="World Cup 2010")

#dev.print(file="SoccerPlayed.jpeg", device=jpeg, width=600)

dev.off()

Nothing very fancy here. Just showing that with a good data source and some online tutorials it is easy enough to knock up a picture in a fairly short time.

Monday, December 24, 2012

The Price Of Guinness

When money's tight and hard to get And your horse has also ran, When all you have is a heap of debt - A PINT OF PLAIN IS YOUR ONLY MAN.Myles Na Gopaleen

How much has Guinness increased in price over time? Below is a graph of the price changes. The data is taken from a combination of the Guinness price index and CSO data

The R package code for this graph is below.

pint<-read.csv('pintindex.csv', sep=',', header=TRUE)

plot(pint$Year, pint$Euros, type="s", main="Price Pint of Guinness in Euros", xlab="Year", ylab="Price Euros", bty="n", lwd=2)

dev.print(file="Guinness.jpeg", device=jpeg, width=600)

dev.off()

Paul in the comments asked a good question. How does this compare to earnings?

price Earnings/Price Earnings per Week (Euro) 2008 4.22 167.31 706.03 2009 4.34 161.69 701.73 2010 4.2 165.02 693.08 2011 4.15 165.81 688.11 2012 4.23 163.56 691.87Here the earnings are average weekly earnings which is the modern and slightly different value to average industrial wage which the Pint Index used. It shows that even with a price drop in Guinness the total purchasing power of pints with wages decreased. This is based on gross wages increases in tax probably made the situation based on net wages worse.

Pintindex.csv is

Year, Euros 1969, 0.2 1973, 0.24 1976, 0.48 1979, 0.7 1983, 1.37 1984, 1.48 1985, 1.52 1986, 1.64 1987, 1.73 1988, 1.8 1989, 1.87 1990, 1.93 1991, 2.02 1992, 2.15 1993, 2.24 1994, 2.34 1995, 2.42 1996, 2.5 1997, 2.52 1998, 2.65 1999, 2.74 2000, 2.88 2001, 3.01 2002, 3.24 2003, 3.41 2004, 3.54 2005, 3.63 2006, 3.74 2007, 4.03 2008, 4.22 2009, 4.34 2010, 4.2 2011, 4.15 2012, 4.23

Wednesday, December 19, 2012

Cystic Fibrosis Improved Screening

Currently there is free screening for people who has relatives with CF and their partners. I assume they include second cousin as a relative. Based on this paper and some consanguinity calculations I calculate that an Irish couple with one of their second cousins has CF have about twice the chance of having a child with CF as the general population. This means you can be tested for free currently if you have about a 1 in 700 chance of having a child with cystic fibrosis whereas the general population with a 1 in 1444 chance. If a test can be focused the test so that it is twice as good as random screening that should be enough by current standards to be rolled out.

How could a non random screening be made this focused?

1. Geographic area. Some areas of the country might be more likely to have CF carriers than others. Targeting screening in these areas might make it twice as effective. The Cystic Fibrosis Registry of Ireland annual report 2010 gives numbers for Irish counties. 4 counties do not have their numbers listed but I have estimated these based on their population.

This map is based on the figures of people with CF found in the registry. This could be a biased sample or people could have moved. A better measure would be babies born with CF in each county.Number of people with CF in each county might be useful for deciding how to allocate some treatment resources. What % of people have CF is more interesting for screening though. To work this out we first need the numbers found in each county.

The number of people with CF in the registry per ten thousand people is

I can send anyone who wants them full sized versions of these maps or the r package code I used to generate them. The code I used is below

library(RColorBrewer)

library(sp)

con <- url("http://gadm.org/data/rda/IRL_adm1.RData")

close(con)

people<-read.csv('cases.csv', sep=',', header=TRUE)

pops = cut(people$cases,breaks=c(0,2,10,20,30,40,50,70,150,300))

myPalette<-brewer.pal(9,"Purples")

spplot(gadm, "pops", col.regions=myPalette, main="Cystic Fibrosis Cases Per County",

lwd=.4, col="black")

dev.print(file="CFIrl.jpeg", device=jpeg, width=600)

dev.off()

population<-read.csv('countypopths.csv', sep=',', header=TRUE)

pops = cut(population$population,breaks=c(0,20,40,60,70,80,100,160,400,1300))

myPalette<-brewer.pal(9,"Greens")

spplot(gadm, "pops", col.regions=myPalette, main="Population in thousands",

lwd=.4, col="black")

dev.print(file="PopIrl.jpeg", device=jpeg, width=600)

dev.off()

gadm$cfpop <- people$cases/(population$population/10)

cfpop = cut(gadm$cfpop,breaks=c(0,0.5,1,1.5,2,2.5,3,3.5))

gadm$cfpop <- as.factor(cfpop)

myPalette<-brewer.pal(7,"Blues")

spplot(gadm, "cfpop", col.regions=myPalette, main="CF/Population Irish Counties",

lwd=.4, col="black")

dev.print(file="CFperPopIrl.jpeg", device=jpeg, width=600)

dev.off()

If this result was replicated in a more complete analysis just picking the darker counties could get you the two times amplification needed to have a test as strong as the currently paid for ones.

2. Pick certain ethnic minorities. Some groups have higher levels of CF than the average population. For example travellers have higher levels of some disorders. 'disorders, including Phenylketonuria and Cystic fibrosis, that are found in virtually all Irish communities and probably are no more common among Travellers than in the general Irish population. The second are disorders, including Galactosaemia, Glutaric Acidaemia Type I, Hurler’s Syndrome, Fanconi’s Anaemia and Type II/III Osteogenesis Imperfecta, that are found at much higher frequencies in the Traveller community than the general Irish population'. 'There is no proactive screening of the Traveller population no more than there is proactive screening of the non-traveller Irish population'. I do not think deliberate screening of one ethnic group, unless that group themselves organise it, is a good idea. Singling out one ethnic group for screening risks stigmatising its members and reminds many of the horror of eugenics.

3. Certain disorders seem to cluster with CF. 'In 1936, Guido Fanconi published a paper describing a connection between celiac disease, cystic fibrosis of the pancreas, and bronchiectasis'. Ireland also has the highest rate of celiac disease in the world (about 1 in 100). If CF and celiac disease or some other observable characteristic are also correlated in Ireland testing people with celiac disease in their family could also provide amplification of a test.

4. Screening parents undergoing IVF. HARI was the first clinic in Ireland to offer IVF and it currently receives up to 800 enquiries a year specifically about the procedure. It carries out over 1,350 cycles of IVF treatment annually and over 3,500 babies have been born as a result. The Merrion Clinic carries out up to 500 cycles of IVF per year, while last year, SIMS carried out 1,063 cycles." IVf is roughly 33% effective per cycle so this means about 1000 children are born through IVF from these three Irish clinics here each year. Screening of these parents would prevent roughly one CF case per year. Screening people who use IVF does not prevent many cases. It can be used by people who know they are CF carriers to avoid having a child with CF though.

Concerns about the privacy and security of a general genetic screening program of the Irish population should not be ignored. Cathal Garvey on twitter pointed out that this screening would require 'With explicit informed consent & ensuing destruction of samples, Just wary of prior shenanigans of HSE bloodspot program. i.e. it's already fashionable among governments to abuse screening programs to create 'law' enforcement databases. Without clear guarantees against that, must weigh the costs of mass DNA false incriminations vs. gains of ntnl screening prog!' I agree that any genetic screening program for Ireland would have to ensure privacy for the individual.

Screening the general population for carriers of serious genetic disorders would save money and suffering. If the level of savings are not sufficient for general screening focusing on certain locations or relatives of people who suffer from disorders that co-occur with CF could amplify the returns sufficiently to be as useful as current screenings.